Data Pipeline

What is Data Pipeline?

Understanding Data Pipelines: Types, Formats, Tools, Industries, and Use Cases for On-Premises and Cloud Environments

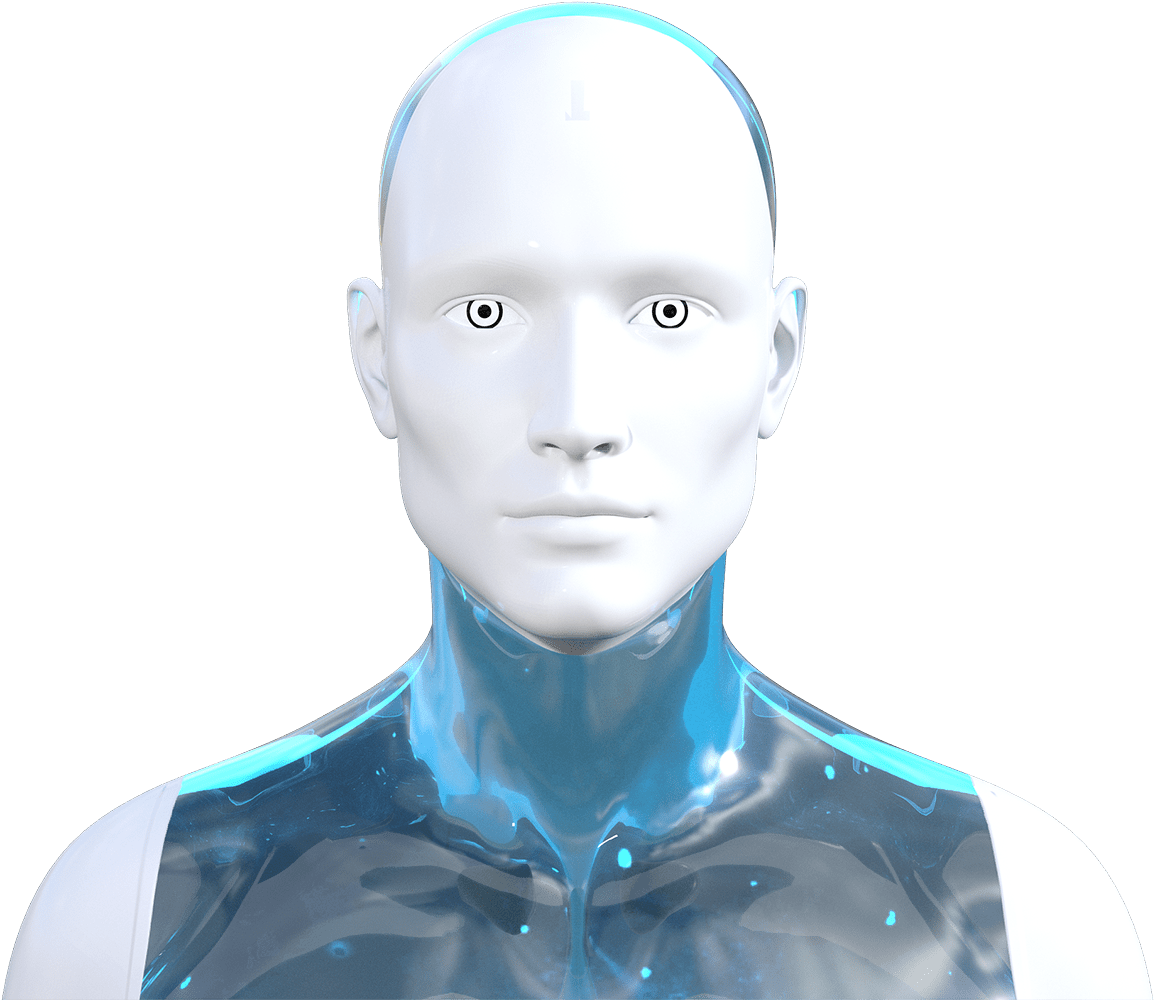

Data pipelines are essential components of modern data architecture. They enable the seamless flow of data from various sources to destinations, ensuring it is processed, transformed, and made available for analysis. This article provides an overview of different types of data pipelines, common data formats, tools available for data ingestion in both on-premises and cloud environments, and key industries with relevant use cases.

Types of Data Pipelines

Data pipelines can be broadly categorized into two main types:

Batch Data Pipelines

Batch pipelines process data in chunks or batches at scheduled intervals. These pipelines are suitable for workloads where real-time processing is not critical, such as daily reports or aggregating historical data.

Use Cases:

Generating daily or weekly business reports.

Processing large datasets for machine learning model training.

Data warehousing for historical analysis.

Examples of Batch Processing Tools:

Apache Spark

Apache Hadoop

Azure Data Factory

Real-Time (Streaming) Data Pipelines

Real-time pipelines process data as it is generated, enabling near-instantaneous insights. These pipelines are critical for scenarios where timely data is crucial.

Cases:

- Fraud detection in financial transactions

- Real-time monitoring of IoT devices

- Dynamic pricing in e-commerce

Examples of Real-Time Processing Tools

- Apache Kafka

- Apache Flink

- Google Cloud Dataflow

Common Data Formats in Data Pipelines

Data pipelines need to handle data in various formats, depending on the source system and the nature of the data. Below are some commonly used data formats:

- CSV (Comma-Separated Values)

CSV is a simple, text-based format where data fields are separated by commas. It is widely used due to its simplicity and compatibility with many tools.

- JSON (JavaScript Object Notation)

JSON is a lightweight data-interchange format that is easy to read and write. It is commonly used in web APIs and NoSQL databases.

- XML (eXtensible Markup Language)

XML is a markup language that defines a set of rules for encoding documents. It is used for data exchange in enterprise applications.

- XLS/XLSX (Microsoft Excel Files)

Excel files are commonly used in business environments for storing structured data. Pipelines often need to extract data from these files for further processing.

- APIs (Application Programming Interfaces)

APIs often provide real-time data in structured formats like JSON or XML. They are a key source of data in modern pipelines.

Tools for Data Ingestion

Data ingestion is the first step in a data pipeline. Various tools are available for ingesting data from multiple sources in both on-premises and cloud environments.

On-Premises Data Ingestion Tools

- Apache Nifi

A powerful data integration tool that supports real-time and batch ingestion.

- Talend

Offers a suite of tools for data integration, transformation, and ingestion.

- Informatica PowerCenter

A popular enterprise-grade tool for data integration and ETL.

Cloud Data Ingestion Tools

- AWS Glue

A fully managed ETL service that makes it easy to prepare and load data for analytics.

- Azure Data Factory

A cloud-based data integration service for creating ETL and ELT pipelines.

- Google Cloud Dataflow

A unified stream and batch data processing service.

- Databricks

A data engineering platform that supports both real-time and batch processing.

Hybrid Tools

Some tools are designed to work seamlessly across on-premises and cloud environments:

- Apache Kafka

A distributed event streaming platform that supports hybrid deployments.

- StreamSets

Provides a data operations platform for building smart data pipelines.

Key Industries and Use Cases for Data Pipelines

Data pipelines play a crucial role across various industries. Below are key industries and examples of how data pipelines are utilized:

- Finance

- Fraud detection using real-time data pipelines.

- Aggregating transactional data for risk assessment.

- Regulatory reporting and compliance.

- Healthcare

- Real-time patient monitoring using IoT devices.

- Batch processing of medical records for research and analysis.

- Data integration from various healthcare systems for a unified patient view.

- Retail and E-Commerce

- Dynamic pricing based on real-time market trends.

- Inventory management and demand forecasting.

- Customer behavior analysis for personalized marketing.

- Manufacturing

- Monitoring production lines in real-time for anomalies.

- Predictive maintenance using machine sensor data.

- Supply chain optimization through batch data analysis.

- Media and Entertainment

- Real-time content recommendation engines.

- Analyzing viewer behavior to improve content strategy.

- Ad targeting and campaign performance analysis.

- Telecommunications

- Network performance monitoring and optimization.

- Customer churn prediction through historical data analysis.

- Real-time billing and usage monitoring.

Key Considerations for Building Data Pipelines

When designing data pipelines, it is important to consider several factors:

- Data Volume: The amount of data to be processed can affect the choice of tools and architecture.

- Latency Requirements: Real-time applications require low-latency pipelines, while batch processing can tolerate higher latency.

- Data Variety: Pipelines must handle diverse data formats and sources.

- Scalability: The pipeline should scale to accommodate future data growth.

- Reliability: Ensure data integrity and fault tolerance through error handling and retry mechanisms.

Conclusion

Data pipelines are vital for modern data-driven organizations. Whether it's batch or real-time processing, selecting the right pipeline type, data format, and tools is crucial for building efficient data workflows. With numerous tools available for both on-premises and cloud environments, businesses can tailor their data pipelines to meet specific requirements. Furthermore, industries such as finance, healthcare, retail, and manufacturing leverage data pipelines for critical use cases, driving innovation and operational efficiency.